Manual testing concepts course 1

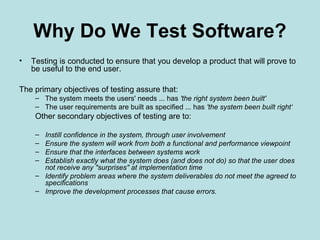

- 1. Why Do We Test Software?
  - Testing is conducted to ensure that you develop a product that will prove useful to the end user. The primary objectives of testing are to assure that:
    - The system meets the users' needs: has "the right system been built"?
    - The user requirements are built as specified: has "the system been built right"?
  - The secondary objectives of testing are to:
    - Instill confidence in the system through user involvement
    - Ensure the system will work from both a functional and a performance viewpoint
    - Ensure that the interfaces between systems work
    - Establish exactly what the system does (and does not do) so that the user does not receive any "surprises" at implementation time
    - Identify problem areas where the system deliverables do not meet the agreed specifications
    - Improve the development processes that cause errors

- 2. Testing can be described in two ways:
  - Testing is the systematic search for defects in all project deliverables.
  - Testing is a process of verifying and/or validating an output against a set of expectations and observing the variances.

- 3. Verification, validation and variances
  - Verification ensures that the system complies with an organization's standards and processes, relying on reviews or other non-executable methods. It answers the question "Did we build the system right?"
  - Validation ensures that the system operates according to plan by executing the system functions through a series of tests that can be observed and evaluated. It answers the question "Did we build the right system?"
  - Variances are deviations of the output of a process from the expected outcome. These variances are often referred to as defects.

- 4. Testing is a quality control activity. Quality has two working definitions:
  - Producer's viewpoint: the quality of the product meets the requirements.
  - Customer's viewpoint: the quality of the product is "fit for use", i.e. it meets the customer's needs.

- 5. Quality Assurance and Quality Control
  - Quality assurance (QA) is a planned and systematic set of activities necessary to provide adequate confidence that products and services will conform to specified requirements and meet user needs. Examples of QA activities include:
    - Estimation processes
    - Testing processes and standards
  - Quality control (QC) is the process by which product quality is compared with applicable standards, and action is taken when nonconformance is detected. QC activities focus on identifying defects in the actual products produced.

- 6. Testing Approaches
  - Static testing is a detailed examination of a work product's characteristics against an expected set of attributes, experiences and standards. Representative examples of static testing are:
    - Requirements walkthroughs or sign-off reviews
    - Design or code inspections
    - Test plan inspections
    - Test case reviews
  - Dynamic testing is the process of verification or validation by exercising (or operating) a work product under scrutiny and observing its behavior as its inputs or environment change. The product is executed so that the behavior of its logic and its response to inputs can be tested.
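
Dynamic testing, together with the idea of variances from slide 3, can be sketched as follows. This is a minimal illustration only. `apply_discount` is a hypothetical work product with a deliberately seeded defect. `run_dynamic_tests` and `Variance` are illustrative names and do not come from the course or from any library.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Variance:
    """A deviation of the actual output from the expected outcome (a candidate defect)."""
    inputs: tuple
    expected: Any
    actual: Any


def run_dynamic_tests(func: Callable[..., Any], cases: list[tuple[tuple, Any]]) -> list[Variance]:
    """Execute func on each input tuple and record every variance from the expected output."""
    variances = []
    for inputs, expected in cases:
        actual = func(*inputs)  # dynamic testing: the work product is actually executed
        if actual != expected:
            variances.append(Variance(inputs, expected, actual))
    return variances


# Hypothetical work product under test, seeded with a defect for illustration.
def apply_discount(price: float, percent: int) -> float:
    if percent >= 100:
        return price  # defect: a 100% discount should give 0.0
    return round(price * (100 - percent) / 100, 2)


cases = [
    ((200.0, 10), 180.0),
    ((50.0, 0), 50.0),
    ((80.0, 100), 0.0),
]
```

Calling `run_dynamic_tests(apply_discount, cases)` returns a single variance, for the `(80.0, 100)` input. A static review of the same function could also spot the defect, but only by reading the code, without executing it.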

- 7. Project Life Cycle Models. Popular life cycle models include:
  - V & V (verification and validation) life cycle model
  - Waterfall life cycle model

- 8. V & V model (diagram)
  - Verification side: business requirements, system requirements, solution requirements, application/function requirements, component requirements, detailed design, build/code
  - Validation side: unit test, integration test (component level), system test (application level), systems integration test (solution level), user acceptance test (UAT), operability test
  - Supporting areas: requirements development and management; IT infrastructure development and maintenance; interface control requirements; application development and maintenance; business process re-engineering; roll-out, training and implementation

- 10. Requirements Testing. Requirements testing involves the verification and validation of requirements through static and dynamic tests.
  - Objectives: to verify that the stated requirements meet the business needs of the end user before the external design is started; to evaluate the requirements for testability
  - When: after the requirements have been stated
  - Input: detailed requirements
  - Output: verified requirements
  - Who: users and developers
  - Methods: static testing techniques, checklists

- 11. Design Testing. Design testing involves the verification and validation of the system design through static and dynamic tests. Validation testing of the external design is done during user acceptance testing, and validation testing of the internal design is covered during unit, integration and system testing.
  - Objectives: to verify that the system design meets the agreed business and technical requirements before system construction begins; to identify missed requirements
  - When: after the external design is completed; after the internal design is completed
  - Input: external application design; internal application design
  - Output: verified external design; verified internal design
  - Who: business analysts and developers
  - Methods: static testing techniques, checklists

- 12. Unit Testing. Unit-level testing is the initial testing of new and changed code in a module. It verifies the internal logic of the program or module against the program specifications and validates that logic.
  - Objectives: to test the function of a unit of code such as a program or module; to test internal logic; to verify the internal design; to test path and condition coverage; to test exception conditions and error handling
  - When: after modules are coded
  - Input: detailed or technical design, and the unit test plan
  - Output: unit test report
  - Who: developers
  - Methods: debugging, code analyzers, path/statement coverage tools
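
A unit test that covers every path, including exception handling, might look like the sketch below. It uses Python's standard `unittest` module. The `grade` function is a hypothetical unit invented for illustration and is not part of the course material.

```python
import unittest


# Hypothetical unit under test: classifies an exam score (not taken from the course).
def grade(score: int) -> str:
    if not isinstance(score, int):
        raise TypeError("score must be an int")
    if score < 0 or score > 100:
        raise ValueError("score must be between 0 and 100")
    return "pass" if score >= 50 else "fail"


class GradeUnitTest(unittest.TestCase):
    """One test per decision outcome, so every path through grade() is executed."""

    def test_pass_path(self):
        self.assertEqual(grade(75), "pass")

    def test_fail_path(self):
        self.assertEqual(grade(20), "fail")

    def test_out_of_range_raises(self):
        # Exception conditions and error handling on both sides of the valid range.
        with self.assertRaises(ValueError):
            grade(101)
        with self.assertRaises(ValueError):
            grade(-1)

    def test_wrong_type_raises(self):
        with self.assertRaises(TypeError):
            grade("75")
```

If the code is saved as a module (for example a hypothetical `grade_test.py`), you can run it with `python -m unittest grade_test`. A coverage tool such as coverage.py (`coverage run --branch -m unittest grade_test`) can then report which statements and branches the tests reached.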

- 13. Integration Testing. Integration-level tests verify the proper execution of application components and do not require the application under test to interface with other applications. Communication between modules within the sub-system is tested in a controlled and isolated environment within the project.
  - Objectives: to technically verify proper interfacing between modules and within sub-systems
  - When: after modules are unit tested
  - Input: detailed design, component requirements and the integration test plan
  - Output: integration test report
  - Who: developers
  - Methods: white-box and black-box techniques
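
The following sketch shows an integration test that exercises two real modules together, while an external application is replaced by a stub so that the test stays isolated within the project. `Inventory`, `OrderService` and `StubPaymentGateway` are all hypothetical names chosen for illustration.

```python
from __future__ import annotations


# Two hypothetical modules of the same sub-system (names are illustrative only).
class Inventory:
    def __init__(self, stock: dict[str, int]):
        self.stock = dict(stock)

    def available(self, item: str, qty: int) -> bool:
        return self.stock.get(item, 0) >= qty

    def reserve(self, item: str, qty: int) -> None:
        self.stock[item] -= qty


class OrderService:
    def __init__(self, inventory: Inventory, payment_gateway):
        self.inventory = inventory
        self.payment_gateway = payment_gateway  # belongs to another application

    def place_order(self, item: str, qty: int, amount: float) -> str:
        if not self.inventory.available(item, qty):
            return "rejected: out of stock"
        if not self.payment_gateway.charge(amount):
            return "rejected: payment failed"
        self.inventory.reserve(item, qty)
        return "accepted"


class StubPaymentGateway:
    """Replaces the external payment application, keeping the test isolated within the project."""

    def __init__(self, approve: bool = True):
        self.approve = approve
        self.charges: list[float] = []

    def charge(self, amount: float) -> bool:
        self.charges.append(amount)
        return self.approve


def integration_test() -> None:
    # The real Inventory and OrderService modules are exercised together through their interface.
    inventory = Inventory({"widget": 2})
    gateway = StubPaymentGateway()
    service = OrderService(inventory, gateway)
    assert service.place_order("widget", 2, 9.99) == "accepted"
    assert inventory.stock["widget"] == 0
    assert service.place_order("widget", 1, 4.99) == "rejected: out of stock"
    assert gateway.charges == [9.99]
```

The interface to the real payment application would be exercised later, during system and systems integration testing.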

- 14. System Testing. System-level tests verify the proper execution of the entire application, including its interfaces to other applications. Both functional and structural tests are performed to verify that the system is functionally and operationally sound.
  - Objectives: to verify that the system components perform control functions; to perform inter-system tests; to demonstrate that the system performs both functionally and operationally as specified; to perform appropriate types of tests relating to transaction flow, installation, reliability, regression, etc.
  - When: after integration testing
  - Input: detailed requirements and external application design; master test plan and system test plan
  - Output: system test report
  - Who: system testers

- 15. Systems Integration Testing. Systems integration testing is a test level that verifies the integration of all applications, including interfaces internal and external to the organization, with their hardware, software and infrastructure components in a production-like environment.
  - Objectives: to test the co-existence of products and applications that are required to perform together in the production-like operational environment (hardware, software, network); to ensure that the system functions together with all the components of its environment as a total system; to ensure that the system releases can be deployed in the current environment
  - When: after system testing
  - Input: master test plan; systems integration test plan
  - Output: systems integration test report
  - Who: system testers

- 16. User Acceptance Testing. User acceptance tests (UAT) verify that the system meets the user requirements as specified.
  - Objectives: to verify that the system meets the user requirements
  - When: after system testing / systems integration testing
  - Input: business needs and detailed requirements; master test plan; user acceptance test plan
  - Output: user acceptance test report
  - Who: customer

- 17. Test Estimation. There are three estimation techniques:
  - Top-down estimation
  - Expert judgment
  - Bottom-up estimation

- 18. Estimation techniques:
  - Top-down estimation: used in the initial stages of the project and based on similar projects. Past data plays an important role in this form of estimation.
  - Function points model: function points (FP) measure size in terms of the amount of functionality in a system.
  - Expert judgment: if someone has experience with certain types of projects, their expertise can be used to estimate the cost of implementing the project.
  - Bottom-up estimation: this cost estimate can be developed only when the project is defined as a baseline. The WBS (work breakdown structure) must be defined and the scope must be fixed. The tasks are then broken down to the lowest level and a cost is attached to each. These costs are added up to the top baselines, which gives the cost estimate.
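
The bottom-up roll-up can be sketched as a recursive sum over a WBS tree. Only the lowest-level tasks carry a cost, and each parent takes the sum of its children. This is a minimal illustration. The `WbsNode` type, the task names and the person-day figures are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class WbsNode:
    name: str
    cost: float = 0.0  # only meaningful for lowest-level tasks
    children: list[WbsNode] = field(default_factory=list)

    def rolled_up_cost(self) -> float:
        """Bottom-up estimate: a leaf returns its own cost, a parent the sum of its children."""
        if not self.children:
            return self.cost
        return sum(child.rolled_up_cost() for child in self.children)


# Hypothetical WBS for a system test effort; costs are illustrative person-days.
test_project = WbsNode("System test", children=[
    WbsNode("Test planning", children=[
        WbsNode("Write system test plan", 3.0),
        WbsNode("Review test plan", 1.0),
    ]),
    WbsNode("Test design", children=[
        WbsNode("Write test cases", 8.0),
        WbsNode("Build RTM", 2.0),
    ]),
    WbsNode("Test execution", 10.0),
])
```

Here `test_project.rolled_up_cost()` gives 24.0 person-days in total. The "Test planning" branch alone contributes 4.0.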

- 19. Test Strategy. The test strategy describes:
  - Who (in generic terms) will conduct the testing
  - The methods, processes and standards used to define, manage and conduct all levels of testing of the application
  - Which levels of testing are in scope and out of scope
  - The types of projects the application will support
  - Test focus
  - Test environment strategy
  - Test data strategy
  - Test tools
  - Metrics
  - OATS

- 20. Test Plan (Master Test Plan, MTP)
  - A test plan is a document prescribing the approach to be taken for intended testing activities. The plan typically identifies the items to be tested, the test objectives, the testing to be performed, test schedules, entry/exit criteria, personnel requirements, reporting requirements, evaluation criteria, and any risks requiring contingency planning. It covers:
    - What will be tested
    - How testing will be performed
    - What resources are needed
    - The test scope, focus areas and objectives
    - The test responsibilities
    - The test strategy for the levels and types of test for this release
    - The entry and exit criteria
    - Any risks, issues, assumptions and test dependencies
    - The test schedule and major milestones

- 21. Test Techniques
  - White-box testing: evaluation techniques that are executed with knowledge of the program's implementation. The objective of white-box testing is to test the program's statements, code paths, conditions or data flow paths. It focuses on how something is done, not on what is done: identify all decisions, conditions and paths.
  - Black-box testing: evaluation techniques that are executed without knowledge of the program's implementation. The tests are based on an analysis of the component's specification, without reference to its internal workings. It focuses on what is done, not on how it is done.
  - Equivalence partitioning: a method for developing test cases by analyzing each possible class of values. Every element of a class is expected to be handled the same way, so you can select any element from:
    - A valid equivalence class
    - An invalid equivalence class
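
Equivalence partitioning can be sketched against a hypothetical specification: an "age" field that accepts whole numbers from 18 to 60 inclusive. That specification, `is_valid_age` and the chosen representative values are all assumptions made for illustration.

```python
from __future__ import annotations

# Hypothetical specification: an "age" field accepts whole numbers from 18 to 60 inclusive.
MIN_AGE, MAX_AGE = 18, 60


def is_valid_age(age: int) -> bool:
    """Implementation under test (hypothetical)."""
    return MIN_AGE <= age <= MAX_AGE


# One representative per equivalence class: every member of a class should be
# handled the same way, so any member will do.
# Each entry: (class, representative value, expected result)
PARTITIONS = [
    ("invalid: below 18", 10, False),
    ("valid: 18 to 60", 35, True),
    ("invalid: above 60", 75, False),
]


def run_partition_tests() -> dict[str, bool]:
    """Map each class to True if its representative behaved as specified."""
    return {name: is_valid_age(value) == expected for name, value, expected in PARTITIONS}
```

This approach needs three test cases in place of one per possible age. The edges between the classes, however, are left to boundary value analysis.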

- 22. Boundary Value Analysis
  - Boundary value analysis is one of the most useful test case design methods and is a refinement of equivalence partitioning. Boundary conditions are the situations directly on, above and beneath the edges of input and output equivalence classes. In boundary value analysis, one or more elements must be selected to test each edge.
  - Error guessing: based on past experience, test data can be created to anticipate the errors that will most often occur. Using experience and knowledge of the application, invalid data representing common mistakes a user might be expected to make can be entered to verify that the system handles these types of errors.
  - Test case template (figure)
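
Boundary value analysis for a valid range `[low, high]` selects values on, just below and just above each edge. The sketch below generates those cases and runs them against a hypothetical implementation, `is_valid_age_buggy`, which has an off-by-one defect for the assumed 18 to 60 age rule.

```python
from __future__ import annotations

from typing import Callable


def boundary_cases(low: int, high: int) -> list[tuple[int, bool]]:
    """(value, expected validity) on, just below and just above each edge of [low, high]."""
    return [
        (low - 1, False),   # beneath the lower edge
        (low, True),        # on the lower edge
        (low + 1, True),    # above the lower edge
        (high - 1, True),   # beneath the upper edge
        (high, True),       # on the upper edge
        (high + 1, False),  # above the upper edge
    ]


def failing_boundaries(check: Callable[[int], bool], low: int, high: int) -> list[int]:
    """Return every boundary value where check() disagrees with the specification."""
    return [value for value, expected in boundary_cases(low, high) if check(value) != expected]


# Hypothetical implementation with an off-by-one defect: 60 should be accepted.
def is_valid_age_buggy(age: int) -> bool:
    return 18 <= age < 60
```

`failing_boundaries(is_valid_age_buggy, 18, 60)` returns `[60]`. A mid-range representative such as 35 would not have revealed this defect.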

- 23. Types of Tests
  - Functional testing: the purpose of functional testing is to ensure that the user's functional requirements and specifications are met. Test conditions are generated to evaluate the correctness of the application.

- 24. Types of Tests (continued)
  - Structural testing: designed to verify that the system is structurally sound and can perform the intended tasks.
  - Audit and controls testing: verifies the adequacy and effectiveness of controls and ensures the capability to prove the completeness of data processing results.
  - Installation testing: the purpose of this testing is to ensure that:
    - All required components are in the installation package
    - The installation procedure is user-friendly and easy to use
    - The installation documentation is complete and accurate

- 25. Types of Tests (continued)
  - Inter-system testing: interface or inter-system testing ensures that the interconnections between applications function correctly.
  - Parallel testing: compares the results of processing the same data in both the old and new systems. Parallel testing is useful when a new application replaces an existing system.
  - Regression testing: verifies that no unwanted changes were introduced to one part of the system as a result of making changes to another part of the system.
  - Usability testing: the purpose of usability testing is to ensure that the final product is usable in a practical, day-to-day fashion.
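
Parallel testing can be sketched as feeding the same inputs to both the old and the new implementation and listing every result that differs. `legacy_interest` and `new_interest` are hypothetical. The new version rounds half up where the old one truncates, so some results deviate. The same comparison, run against a saved baseline after each change, is a simple form of regression check.

```python
from __future__ import annotations

from typing import Callable


# Hypothetical legacy system: 3% interest on a balance in cents, truncated.
def legacy_interest(balance_cents: int) -> int:
    return balance_cents * 3 // 100


# Hypothetical replacement: rounds half up instead of truncating.
def new_interest(balance_cents: int) -> int:
    return (balance_cents * 3 + 50) // 100


def parallel_test(old: Callable[[int], int], new: Callable[[int], int],
                  inputs: list[int]) -> list[tuple[int, int, int]]:
    """Process the same data through both systems; return (input, old, new) for every mismatch."""
    mismatches = []
    for value in inputs:
        old_result, new_result = old(value), new(value)
        if old_result != new_result:
            mismatches.append((value, old_result, new_result))
    return mismatches
```

`parallel_test(legacy_interest, new_interest, [10000, 110, 150])` reports one mismatch, `(150, 4, 5)`. Whether the old or the new result is correct must then be decided against the specification.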

- 26. Types of Tests (continued)
  - Ad-hoc testing: testing carried out without any recognized test case design technique. The tester relies on knowledge of the application and tests the system randomly, without test cases, specifications or requirements.
  - Smoke testing: performed at the start, as soon as the application is deployed, to check whether the most crucial functions of the program are working. It is oriented toward the main functionality and serves as a basic health check of a build before it is taken into in-depth testing.
  - Sanity testing: used to determine whether a small section of the application is still working after a minor change. It focuses on one or a few areas of functionality and is performed once a new build with minor revisions is obtained.

- 27. Types of Tests (continued)
  - Backup and recovery testing: recovery is the ability of an application to be restarted after a failure. The process usually involves backing up to a point in the processing cycle where the integrity of the system is assured and then re-processing the transactions past the original point of failure.
  - Contingency testing: verifies that an application and its databases, networks and operating processes can all be migrated smoothly to the alternate (contingency) site.
  - Performance testing: designed to test whether the system meets the desired level of performance in a production-like environment.
  - Security testing: security of an application system is required to ensure that confidential information in the system, and in other affected systems, is protected against loss, corruption or misuse, whether through deliberate or accidental actions.
  - Stress/volume testing: stress testing is defined as processing a large number of transactions through the system in a defined period of time. It verifies that:
    - The production system can process large volumes of transactions within the expected time frame
    - The system architecture and construction are capable of processing large volumes of data

- 28. RTM (Requirements Traceability Matrix) / Test Coverage Matrix: a worksheet used to plan and cross-check that all requirements and functions are adequately covered by test cases.
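
An RTM can be sketched as a mapping from each requirement to the test cases that cover it. Any requirement with an empty list is a coverage gap. The requirement and test case IDs below are hypothetical.

```python
from __future__ import annotations

# Hypothetical requirement and test case IDs, for illustration only.
requirements = ["REQ-001", "REQ-002", "REQ-003", "REQ-004"]

# Each test case lists the requirements it exercises.
test_cases = {
    "TC-01": ["REQ-001"],
    "TC-02": ["REQ-001", "REQ-002"],
    "TC-03": ["REQ-004"],
}


def build_rtm(reqs: list[str], tests: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map every requirement to the test cases that cover it (empty list = not covered)."""
    rtm: dict[str, list[str]] = {req: [] for req in reqs}
    for tc_id, covered in tests.items():
        for req in covered:
            # A requirement referenced only by a test is still listed, so it can be reviewed.
            rtm.setdefault(req, []).append(tc_id)
    return rtm


def uncovered(rtm: dict[str, list[str]]) -> list[str]:
    """Requirements that no test case covers yet."""
    return [req for req, tcs in rtm.items() if not tcs]
```

Here `uncovered(build_rtm(requirements, test_cases))` returns `["REQ-003"]`, which shows where a test case still needs to be written.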

- 30. Defect (bug) life cycle statuses:
  - New: when a bug is found for the first time, the software tester reports it to the test lead to confirm whether it is a valid bug. After confirmation from the test lead, the tester logs the bug and it is assigned the status 'New'.
  - Open: once the developer starts working on the bug, he/she changes its status to 'Open' to indicate that a fix is in progress.
  - Fixed: once the developer has made and verified the necessary code changes, he/she marks the bug as 'Fixed' and passes it to the development lead, who hands it over to the testing team.
  - Retest: after the bug is fixed, it is passed back to the testing team to be retested and is assigned the status 'Retest'.
  - Closed: a bug with the status 'Retest' is tested again. If the problem is solved, the tester marks it as 'Closed'.

- 31. Defect life cycle statuses (continued):
  - Reopen: if, during retesting, the system behaves the same way or the same bug appears again, the tester reopens the bug and sends it back to the developer with the status 'Reopen'.
  - Rejected: if the system test lead finds that the system is working according to the specifications, or the bug is invalid according to the explanation from development, he/she rejects the bug and marks its status as 'Rejected'.
  - Deferred: in some cases a bug is not important enough to fix now and its fix is postponed. It is then marked with the status 'Deferred'.
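
The life cycle on slides 30 and 31 can be sketched as a state machine that rejects illegal status changes. The slides do not say exactly where every transition starts. The following are therefore assumptions: Rejected and Deferred can be entered from New or Open, Reopen leads back to Open, and Closed, Rejected and Deferred are treated as terminal for simplicity. `Bug` and `TRANSITIONS` are illustrative names.

```python
from enum import Enum


class BugStatus(Enum):
    NEW = "New"
    OPEN = "Open"
    FIXED = "Fixed"
    RETEST = "Retest"
    CLOSED = "Closed"
    REOPEN = "Reopen"
    REJECTED = "Rejected"
    DEFERRED = "Deferred"


# Allowed transitions. Entry points for Rejected/Deferred and Reopen -> Open are assumptions.
TRANSITIONS = {
    BugStatus.NEW: {BugStatus.OPEN, BugStatus.REJECTED, BugStatus.DEFERRED},
    BugStatus.OPEN: {BugStatus.FIXED, BugStatus.REJECTED, BugStatus.DEFERRED},
    BugStatus.FIXED: {BugStatus.RETEST},
    BugStatus.RETEST: {BugStatus.CLOSED, BugStatus.REOPEN},
    BugStatus.REOPEN: {BugStatus.OPEN},
    BugStatus.CLOSED: set(),
    BugStatus.REJECTED: set(),
    BugStatus.DEFERRED: set(),  # treated as terminal here for simplicity
}


class Bug:
    def __init__(self, title: str):
        self.title = title
        self.status = BugStatus.NEW  # logged after the test lead confirms it is valid
        self.history = [BugStatus.NEW]

    def move_to(self, new_status: BugStatus) -> None:
        """Change status, refusing any transition the life cycle does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not allowed")
        self.status = new_status
        self.history.append(new_status)
```

For example, a bug can move New, Open, Fixed, Retest, Reopen, Open, Fixed, Retest, Closed. Trying to go straight from New to Closed raises `ValueError`.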

- 32. Severity & Priority
  - Severity: determines the defect's effect on the application. Severity is assigned by testers.
  - Priority: determines the urgency of repairing the defect. Priority is assigned by the test lead, the project manager or senior testers.

- 33. Severity levels
  - Severity 1: a critical problem for which there is no workaround and testing cannot continue. A problem is considered severity 1 when it:
    - Causes the system to stop (e.g. no response to any command, no user display)
    - Prevents the system from being restarted after a crash
    - Disables user access to the system
    - Causes function aborts, data loss or data corruption
    - Corrupts or damages the operating system, hardware or any associated applications
  - Severity 2: a high-severity problem where some testing can continue. A problem is considered severity 2 if:
    - It affects the operation of more than two functions
    - The operation of the function permanently degrades performance outside the specified required parameters
  - Severity 3: a medium-severity problem that does not prevent testing from continuing. A problem is considered severity 3 if:
    - It causes the function to abort with incorrect or malicious use
    - It affects a subset of a function but does not prevent the function from being performed
    - It is unclear documentation that causes user misunderstanding but does not cause function aborts, data loss or data corruption

- 34. Severity and priority levels
  - Severity 4: a low-severity problem that does not prevent testing from continuing. A problem is considered incidental (severity 4) if:
    - It is a spelling or grammatical error
    - It is a cosmetic or layout flaw
  - Priority 1: the problem has a major impact on the test team and testing may not be able to continue. The fix is urgent and immediate action is required.
  - Priority 2: the problem is preventing testing of an entire component or function, although testing of other components or functions may be able to continue. The priority is high and prompt action is required.

- 35. Priority levels and examples
  - Priority 3: the test cases for a particular functional matrix (or sub-component) cannot be executed, but testing can continue in other areas. The priority is medium.
  - Priority 4: some of the functionality exercised by a test case does not work as expected, but testing can continue. The priority is low.
  - Examples:
    - 1. High severity & low priority: an application generates banking reports weekly, monthly, quarterly and yearly by performing calculations. A fault in the yearly report calculation is high severity but low priority, because it can be fixed in the next release as a change request.
    - 2. High severity & high priority: in the same application, a fault in the weekly report calculation is both high severity and high priority, because it will block the application's functionality within a week. It should be fixed urgently.

- 36. Examples (continued)
  - 3. Low severity & high priority: a spelling mistake or content issue on the homepage of a website that receives lakhs (hundreds of thousands) of hits daily. The fault does not affect the website's other functionality, but given the website's status and popularity in a competitive market, it is a high-priority fault.
  - 4. Low severity & low priority: a spelling mistake on pages that receive very few hits over a month. This fault can be considered low severity and low priority.
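
Severity and priority are independent fields on a defect. The sketch below records the four examples above with assumed numeric levels (1 is the most severe or most urgent). It orders them for fixing under a hypothetical triage rule: priority first, with severity used only to break ties. Neither the numeric levels nor the tie-break rule come from the course.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Defect:
    summary: str
    severity: int  # 1 (critical) .. 4 (low): effect on the application, set by testers
    priority: int  # 1 (urgent) .. 4 (low): urgency of repair, set by the test lead / PM


def fix_order(defects: list[Defect]) -> list[str]:
    """Hypothetical triage rule: fix by priority first, using severity only to break ties."""
    return [d.summary for d in sorted(defects, key=lambda d: (d.priority, d.severity))]


# The four examples from the slides, with assumed numeric levels.
defects = [
    Defect("Yearly report calculation wrong", severity=1, priority=4),
    Defect("Weekly report calculation wrong", severity=1, priority=1),
    Defect("Typo on high-traffic homepage", severity=4, priority=1),
    Defect("Typo on rarely visited page", severity=4, priority=4),
]
```

Under this rule the homepage typo is fixed before the yearly report fault, even though its severity is lower. This reflects the point of the examples: urgency, not impact alone, drives the order of repair.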

- 37. Test Summary Report (e.g. the System Test Report). It answers:
  - What was tested?
  - What are the results?
  - What are the recommendations?
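
The three questions can be answered from raw execution results with a small summary function. This is a sketch only. The results are hypothetical, and the recommendation rule (not ready while any test has failed or is blocked) is an assumed stand-in for the exit criteria that a real test plan would define.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical execution results for illustration: test case ID -> outcome.
RESULTS = {
    "TC-01": "pass",
    "TC-02": "fail",
    "TC-03": "pass",
    "TC-04": "blocked",
    "TC-05": "pass",
}


def summarize(results: dict[str, str]) -> dict[str, object]:
    counts = Counter(results.values())
    executed = counts["pass"] + counts["fail"]  # blocked tests were not executed
    failed = sorted(tc for tc, outcome in results.items() if outcome == "fail")
    # Assumed recommendation rule; real exit criteria come from the test plan.
    if failed or counts["blocked"]:
        recommendation = "not ready: resolve failed and blocked tests"
    else:
        recommendation = "ready for sign-off"
    return {
        "tested": len(results),                      # what was tested?
        "counts": dict(counts),                      # what are the results?
        "pass_rate_percent": round(100 * counts["pass"] / executed, 1) if executed else 0.0,
        "failed": failed,
        "recommendation": recommendation,            # what are the recommendations?
    }
```

For the sample data, `summarize(RESULTS)` reports 5 tests, a 75.0% pass rate over the 4 executed tests, and `TC-02` as failed.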

- 38. The basic structure that must be followed when developing any software/project, including the testing process, is called a testing methodology.